8.2.3 Reinforcement learning

🌳 Tip 🌳

To refresh your knowledge of deep RL, check out Spinning Up in Deep RL (OpenAI).

- [E] Explain the explore vs exploit tradeoff with examples.

- [E] How would a finite or infinite horizon affect our algorithms?

- [E] Why do we need the discount term for objective functions?
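As a warm-up for the horizon and discount questions above, here is a minimal sketch of how a discounted return is computed. The function name `discounted_return` and the reward sequences are hypothetical, chosen only for illustration. It shows that with a discount factor below 1, a long stream of constant rewards stays bounded (it approaches 1 / (1 - gamma)), while with a discount factor of 1 the sum keeps growing with the horizon.

```python
def discounted_return(rewards, gamma):
    """Return sum over t of gamma**t * rewards[t] for a finite reward list.

    Hypothetical helper for illustration; iterates backwards so that each
    step applies the recursion G_t = r_t + gamma * G_{t+1}.
    """
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Constant reward of 1 per step over a long (but finite) horizon.
long_horizon = [1.0] * 1000

bounded = discounted_return(long_horizon, gamma=0.9)    # close to 1 / (1 - 0.9)
unbounded = discounted_return(long_horizon, gamma=1.0)  # grows with the horizon
```

With `gamma=1.0` the return equals the horizon length, which is one way to see why an infinite-horizon objective typically needs a discount term (or some other normalization) to stay finite.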

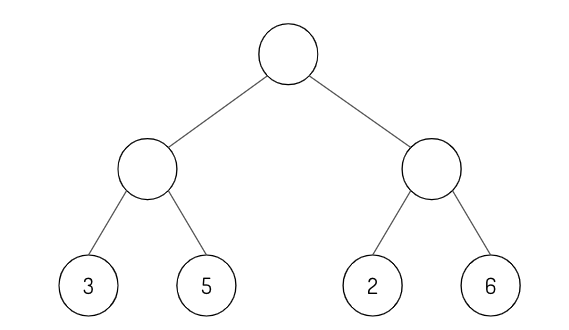

- [E] Fill in the empty circles using the minimax algorithm.

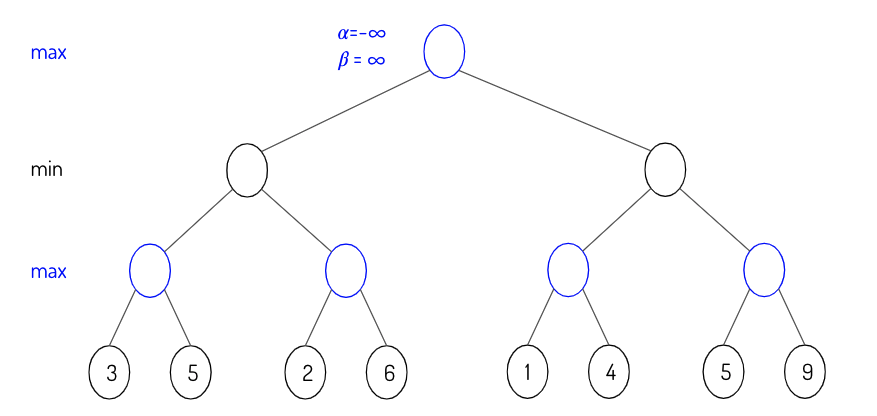

- [M] Fill in the alpha and beta values as you traverse the minimax tree from left to right.

- [E] Given a policy, derive the reward function.

- [M] Pros and cons of on-policy vs. off-policy.

- [M] What’s the difference between model-based and model-free? Which one is more data-efficient?