8.1.2 Questions

- [E] What are the basic assumptions to be made for linear regression?

- [E] What happens if we don’t apply feature scaling to logistic regression?

- [E] What are the algorithms you’d use when developing the prototype of a fraud detection model?

- Feature selection.

- [E] Why do we use feature selection?

- [M] What are some of the algorithms for feature selection? What are the pros and cons of each?
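Here is a minimal sketch of one family of feature-selection methods, a filter method. It ranks features by the absolute Pearson correlation with the target and keeps the top k. The data and helper names (`pearson`, `select_k_best`) are hypothetical. Filter methods like this are cheap and model-agnostic. However, they score each feature in isolation, so they miss interactions and nonlinear relationships. Wrapper methods (e.g., recursive feature elimination) and embedded methods (e.g., L1 regularization) can capture those.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(va * vb)


def select_k_best(X, y, k):
    """Filter method: keep the k feature indices with the largest |correlation| with y."""
    n_features = len(X[0])
    columns = [[row[j] for row in X] for j in range(n_features)]
    scores = [abs(pearson(col, y)) for col in columns]
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return ranked[:k]


# Hypothetical toy data: feature 0 tracks y closely, feature 1 is mostly noise,
# feature 2 is constant.
X = [[1, 2, 7], [2, 1, 7], [3, 2, 7], [4, 1, 7], [5, 2, 7]]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

print(select_k_best(X, y, k=2))
```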

- k-means clustering.

- [E] How would you choose the value of k?

- [E] If the labels are known, how would you evaluate the performance of your k-means clustering algorithm?

- [M] How would you do it if the labels aren’t known?

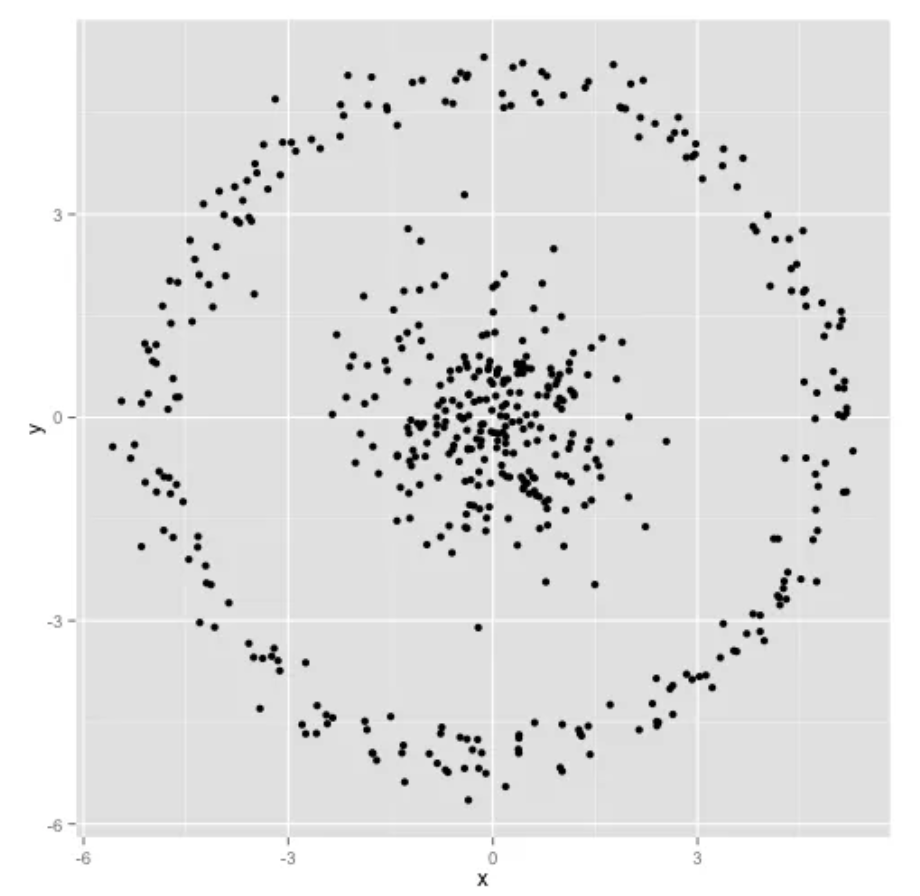

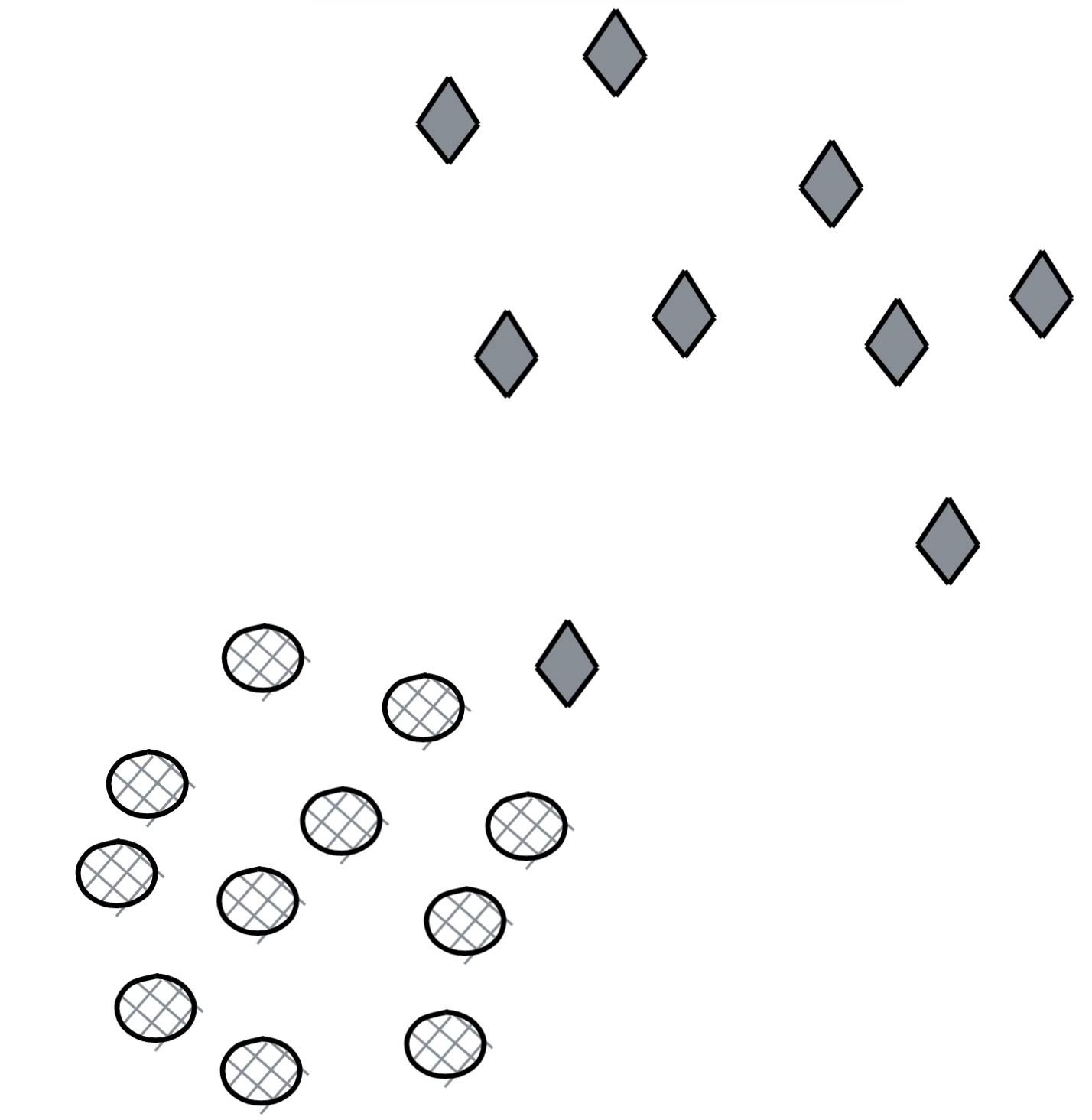

[H] Given the following dataset, can you predict how K-means clustering works on it? Explain.
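One common heuristic for choosing k is the elbow method. You run k-means for several values of k and look for the point where the within-cluster sum of squares (inertia) stops dropping sharply. The sketch below is a plain-Python version of Lloyd's algorithm on hypothetical toy data with three well-separated blobs. The deterministic farthest-first seeding is a simplification assumed here in place of the randomized k-means++ seeding most libraries use.

```python
def squared_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first_init(points, k):
    """Deterministic seeding: start at points[0], then repeatedly add the point
    farthest from its nearest chosen centroid (a stand-in for k-means++)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(squared_dist(p, c) for c in centroids))
        centroids.append(list(far))
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm. Returns (centroids, labels, inertia)."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[j] = [sum(coord) / len(members) for coord in zip(*members)]
    # Inertia: within-cluster sum of squared distances, the quantity an elbow plot tracks.
    inertia = sum(squared_dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return centroids, labels, inertia


# Hypothetical data: three tight 2x2 blobs far apart from each other.
POINTS = [
    (0, 0), (0, 1), (1, 0), (1, 1),
    (10, 10), (10, 11), (11, 10), (11, 11),
    (20, 0), (20, 1), (21, 0), (21, 1),
]

for k in range(1, 6):
    print(k, round(kmeans(POINTS, k)[2], 2))
```

On this toy data the inertia falls steeply up to k = 3 and much more slowly after that, which marks the elbow. Real data rarely has such a clean elbow, so label-free scores such as the silhouette coefficient are often checked as well.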

- k-nearest neighbor classification.

- [E] How would you choose the value of k?

- [E] What happens when you increase or decrease the value of k?

- [M] How does the value of k impact the bias and variance?
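The effect of k shows up on a tiny hypothetical dataset with two classes and one mislabeled "b" point inside the "a" region. With k = 1, the classifier follows that noisy point, which means low bias and high variance. When k equals the whole training set, it always predicts the majority class, which means high bias and low variance. The function name `knn_predict` and the data are illustrative assumptions.

```python
from collections import Counter


def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k training points closest to `query`."""
    # Rank training points by squared Euclidean distance to the query.
    order = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], query)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    # most_common keeps first-seen order on ties, so a tie goes to the label seen nearer.
    return votes.most_common(1)[0][0]


# Hypothetical data: class "a" near the origin, class "b" near (5, 5),
# and one noisy "b" point at (0.5, 0.5) inside the "a" region.
TRAIN_X = [(0, 0), (0, 1), (1, 0), (1, 1), (0, 2), (5, 5), (5, 6), (6, 5), (0.5, 0.5)]
TRAIN_Y = ["a", "a", "a", "a", "a", "b", "b", "b", "b"]

for k in (1, 5, len(TRAIN_X)):
    near_a = knn_predict(TRAIN_X, TRAIN_Y, (0.6, 0.6), k)
    near_b = knn_predict(TRAIN_X, TRAIN_Y, (5.2, 5.2), k)
    print(k, near_a, near_b)
```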

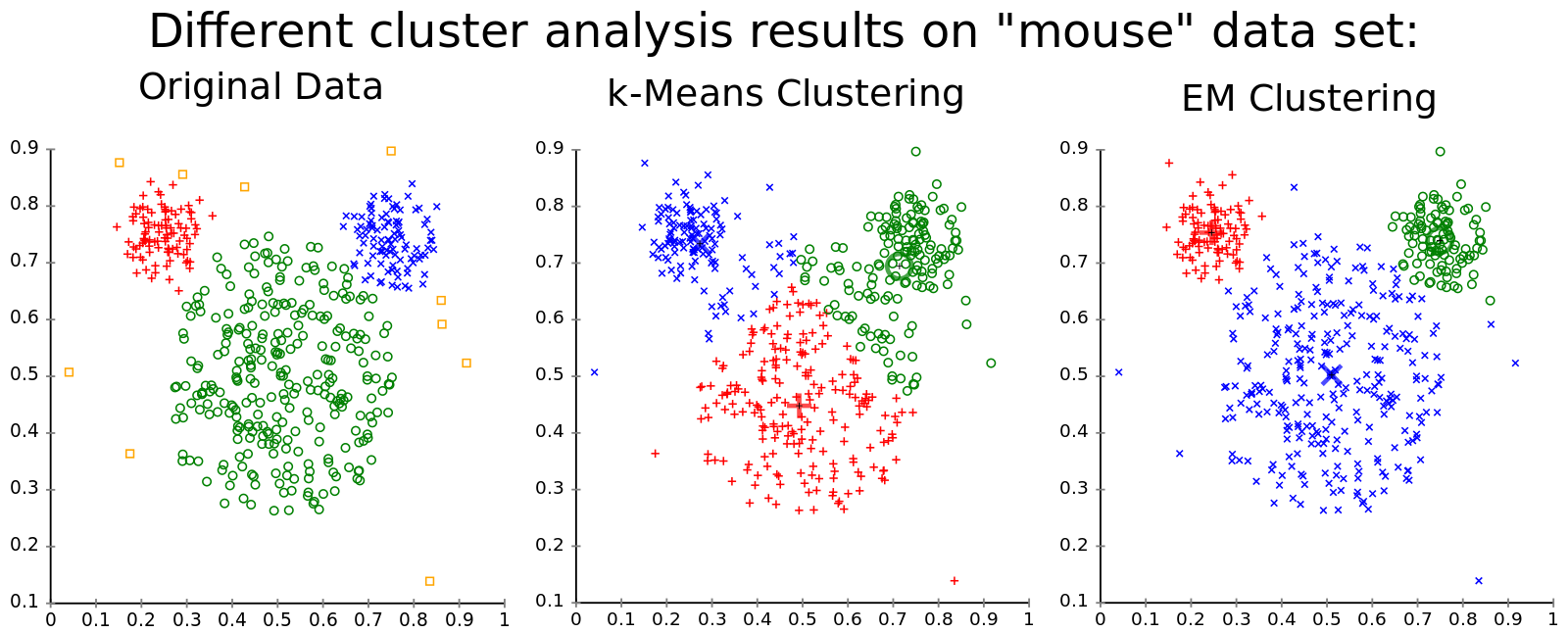

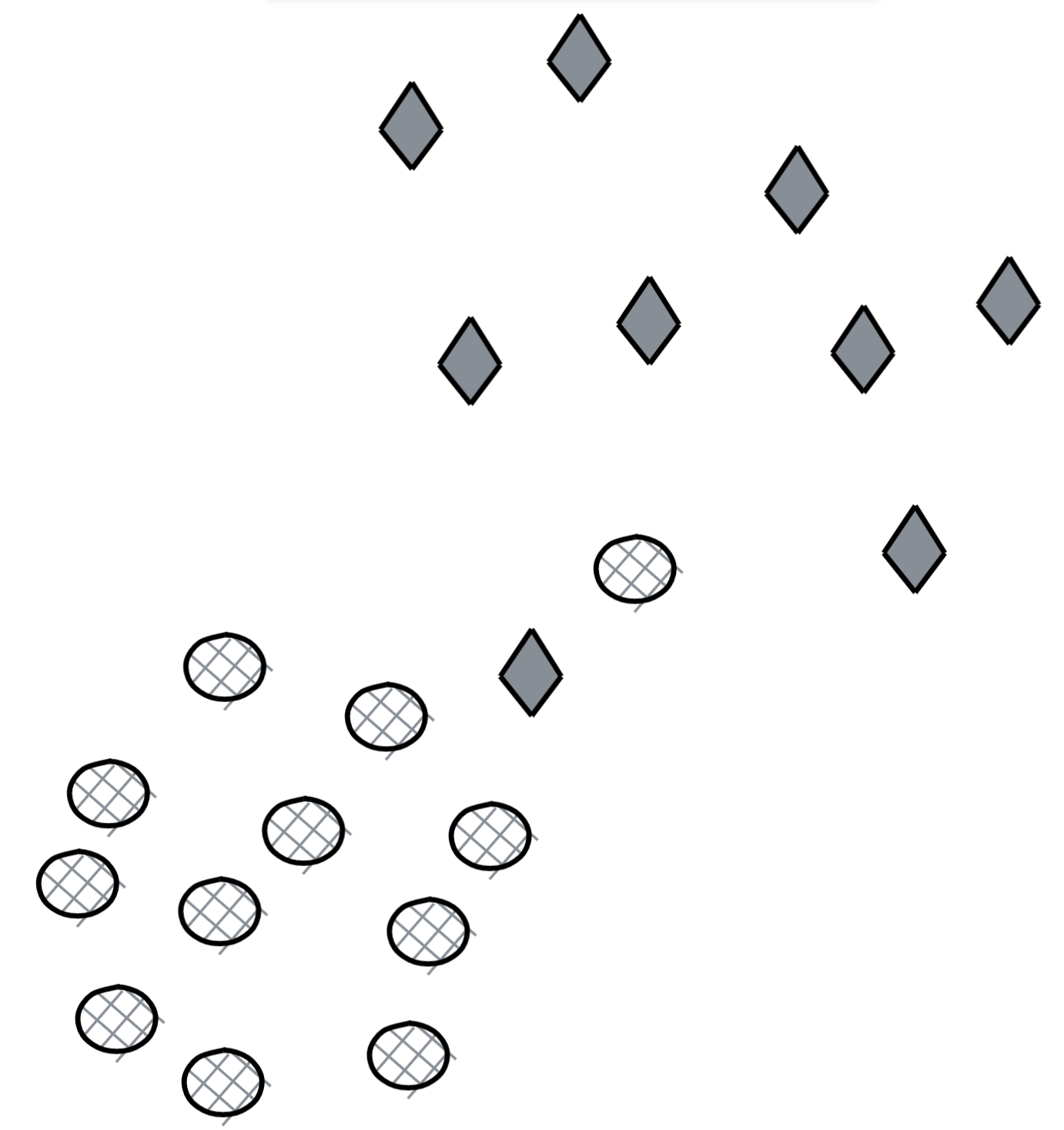

k-means and GMM are both powerful clustering algorithms.

- [M] Compare the two.

[M] When would you choose one over another?

Hint: Here’s an example of how K-means and GMM algorithms perform on the artificial mouse dataset.

Image from Mohamad Ghassany’s course on Machine Learning

- Bagging and boosting are two popular ensemble methods. Random forest is an example of bagging, while XGBoost is an example of boosting.

- [M] What are some of the fundamental differences between bagging and boosting algorithms?

- [M] How are they used in deep learning?
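Below is a minimal sketch of bagging (bootstrap aggregating). It assumes regression stumps on 1-D inputs as the base learner, a hypothetical choice made for brevity. Each stump is trained independently on a resample drawn with replacement, and the ensemble averages their predictions. Boosting, by contrast, trains its learners sequentially, and each new learner focuses on what the current ensemble still gets wrong.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Depth-1 regression tree on 1-D inputs, chosen by minimum squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # split as x <= t vs x > t; both sides non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all inputs identical: fall back to a constant
        c = mean(ys)
        return lambda x: c
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagging(xs, ys, n_estimators=25, seed=0):
    """Fit each stump on a bootstrap resample, then average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sample n indices with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)


# Hypothetical step-shaped data.
XS = list(range(10))
YS = [0] * 5 + [10] * 5
predict = bagging(XS, YS)
print([round(predict(x), 2) for x in XS])
```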

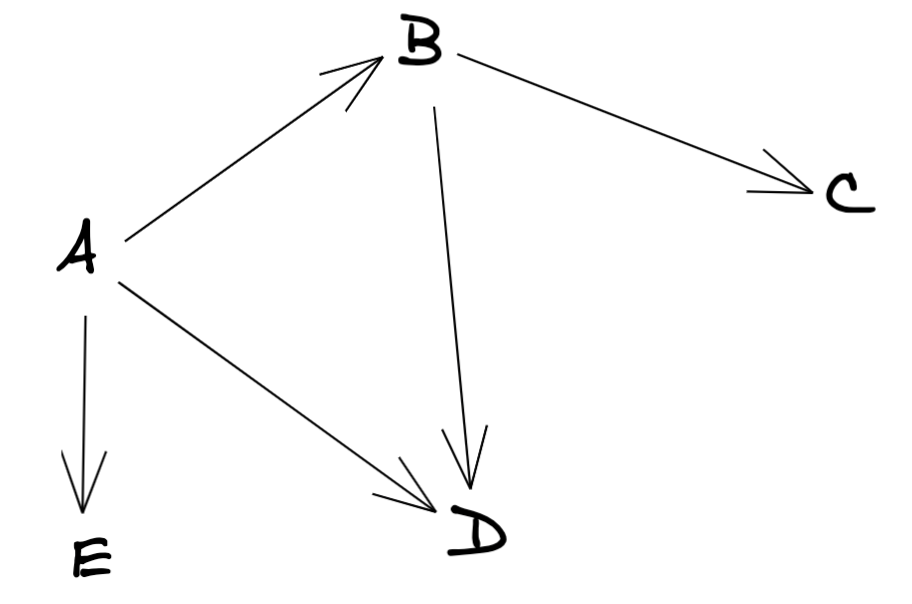

- Given this directed graph.

- [E] Construct its adjacency matrix.

- [E] How would this matrix change if the graph is now undirected?

- [M] What can you say about the adjacency matrices of two isomorphic graphs?
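The graph in the figure is not reproduced in this text, so this sketch uses a hypothetical 4-node edge list. Entry A[i][j] is 1 when there is an edge from node i to node j. Making the graph undirected records each edge in both directions, so the matrix becomes symmetric (the elementwise maximum of A and its transpose). Two graphs are isomorphic exactly when one adjacency matrix can be turned into the other by applying the same permutation to rows and columns, B = P A P^T. As a result, they share invariants such as the sorted degree sequence and the eigenvalues. The function names are illustrative.

```python
def adjacency_matrix(n, edges, directed=True):
    """A[i][j] = 1 if there is an edge from node i to node j."""
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        A[u][v] = 1
        if not directed:
            A[v][u] = 1  # an undirected edge is recorded in both directions
    return A


def relabel(A, perm):
    """Adjacency matrix after renaming node i to perm[i]; equals P A P^T."""
    n = len(A)
    B = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            B[perm[i]][perm[j]] = A[i][j]
    return B


# Hypothetical edge list, not the graph from the figure.
EDGES = [(0, 1), (1, 2), (2, 0), (2, 3)]
A_dir = adjacency_matrix(4, EDGES)
A_undir = adjacency_matrix(4, EDGES, directed=False)

for row in A_dir:
    print(row)
```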

- Imagine we build a user-item collaborative filtering system to recommend to each user items similar to the items they’ve bought before.

- [M] You can build either a user-item matrix or an item-item matrix. What are the pros and cons of each approach?

- [E] How would you handle a new user who hasn’t made any purchases in the past?
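Here is a minimal item-item sketch on a hypothetical binary purchase matrix. Items are compared by the cosine similarity of their purchase columns. Each item a user has not bought is then scored by its summed similarity to the items that user already bought. For a user with no purchases, the sketch falls back to popularity. This is one common cold-start choice, assumed here for illustration. The function names are hypothetical.

```python
import math

# Hypothetical purchase matrix: rows are users, columns are items (1 = bought).
PURCHASES = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 1],
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def item_item_similarity(R):
    """Cosine similarity between every pair of item columns."""
    columns = [list(col) for col in zip(*R)]
    return [[cosine(ci, cj) for cj in columns] for ci in columns]


def recommend(R, S, user_row, top_n=2):
    """Score unseen items by summed similarity to the items this user bought."""
    bought = [j for j, v in enumerate(user_row) if v]
    if not bought:
        # Cold-start fallback (an assumption here): rank items by overall popularity.
        popularity = [sum(col) for col in zip(*R)]
        return sorted(range(len(popularity)), key=lambda j: -popularity[j])[:top_n]
    scores = {
        j: sum(S[j][b] for b in bought)
        for j in range(len(user_row))
        if j not in bought
    }
    return sorted(scores, key=lambda j: -scores[j])[:top_n]


S = item_item_similarity(PURCHASES)
print(recommend(PURCHASES, S, [1, 0, 0, 0]))
print(recommend(PURCHASES, S, [0, 0, 0, 0]))
```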

- [E] Is feature scaling necessary for kernel methods?
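Kernels such as the RBF kernel exp(-gamma * ||x - y||^2) depend on distances, so a feature measured in large units can dominate. This hypothetical example uses income in dollars, age in years, and an arbitrary gamma. The raw kernel treats a $100 income gap as far more significant than a 35-year age gap. After z-scoring each feature, both pairs differ by the same number of standard deviations and receive the same kernel value. The helper names are illustrative.

```python
import math
from statistics import mean, pstdev


def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def standardize(points):
    """Z-score every feature column (assumes no column is constant)."""
    columns = list(zip(*points))
    mus = [mean(c) for c in columns]
    sds = [pstdev(c) for c in columns]
    return [tuple((v - m) / s for v, m, s in zip(p, mus, sds)) for p in points]


# Hypothetical customers: (annual income in dollars, age in years).
a, b, c = (50_000, 25), (50_000, 60), (50_100, 25)
GAMMA = 1e-3  # arbitrary illustrative value

print(rbf_kernel(a, b, GAMMA), rbf_kernel(a, c, GAMMA))
sa, sb, sc = standardize([a, b, c])
print(rbf_kernel(sa, sb, GAMMA), rbf_kernel(sa, sc, GAMMA))
```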

- Naive Bayes classifier.

- [E] How is the Naive Bayes classifier naive?

- [M] Let’s try to construct a Naive Bayes classifier to classify whether a tweet has a positive or negative sentiment. We have four training samples:

| Tweet | Label |
|---|---|
| This makes me so upset | Negative |
| This puppy makes me happy | Positive |
| Look at this happy hamster | Positive |
| No hamsters allowed in my house | Negative |
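Here is a minimal sketch of such a classifier on the four samples above. It assumes multinomial Naive Bayes with add-one (Laplace) smoothing and lowercase whitespace tokenization, and it ignores words that never appear in training. The "naive" part shows up in `predict_nb`. Under the conditional-independence assumption, each word contributes its own log-likelihood term, and the terms are summed. Other preprocessing choices change the scores, for example stemming "hamsters" to "hamster" or keeping unseen words in the vocabulary. The function names are hypothetical.

```python
import math
from collections import Counter

TRAIN = [
    ("This makes me so upset", "Negative"),
    ("This puppy makes me happy", "Positive"),
    ("Look at this happy hamster", "Positive"),
    ("No hamsters allowed in my house", "Negative"),
]


def tokenize(text):
    return [w.strip(".,!?") for w in text.lower().split()]


def train_nb(samples):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(label for _, label in samples)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in samples:
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    """Return (best label, per-class log scores)."""
    class_counts, word_counts, vocab = model
    n_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total = sum(word_counts[label].values())
        score = math.log(class_counts[label] / n_docs)  # log prior
        for w in tokenize(text):
            if w not in vocab:  # ignore words never seen in training (an assumption)
                continue
            # Add-one smoothed log P(word | class), summed under the naive assumption.
            score += math.log((word_counts[label][w] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get), scores


model = train_nb(TRAIN)
print(predict_nb(model, "The hamster is upset with the puppy"))
```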

According to your classifier, what is the sentiment of the sentence "The hamster is upset with the puppy"?

- Two popular algorithms in winning Kaggle solutions are LightGBM and XGBoost. They are both gradient boosting algorithms.

- [E] What is gradient boosting?

- [M] What problems is gradient boosting good for?
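Gradient boosting builds an additive model one stage at a time. Each new weak learner is fit to the negative gradient of the loss with respect to the current predictions, then added with a small learning rate. For squared loss, that negative gradient is simply the residual, which keeps the sketch below short. The sketch assumes 1-D inputs, regression stumps as weak learners, and hypothetical data. XGBoost and LightGBM add regularization, second-order gradient information, and much faster tree construction on top of this basic loop.

```python
from statistics import mean


def fit_stump(xs, ys):
    """Depth-1 regression tree on 1-D inputs, chosen by minimum squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # split as x <= t vs x > t; both sides non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all inputs identical: fall back to a constant
        c = mean(ys)
        return lambda x: c
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared loss with regression stumps as weak learners."""
    base = mean(ys)  # F_0: the constant that minimizes squared loss
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        # For L = (y - F)^2 / 2, the negative gradient w.r.t. F is the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


XS = list(range(10))
YS = [x * x for x in XS]  # hypothetical 1-D regression target
for rounds in (0, 10, 100):
    print(rounds, round(mse(gradient_boost(XS, YS, n_rounds=rounds), XS, YS), 3))
```

Training error shrinks as rounds are added. In practice, the number of rounds and the learning rate are tuned on held-out data to avoid overfitting.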

- SVM.

- [E] What’s linear separation? Why is it desirable when we use SVM?

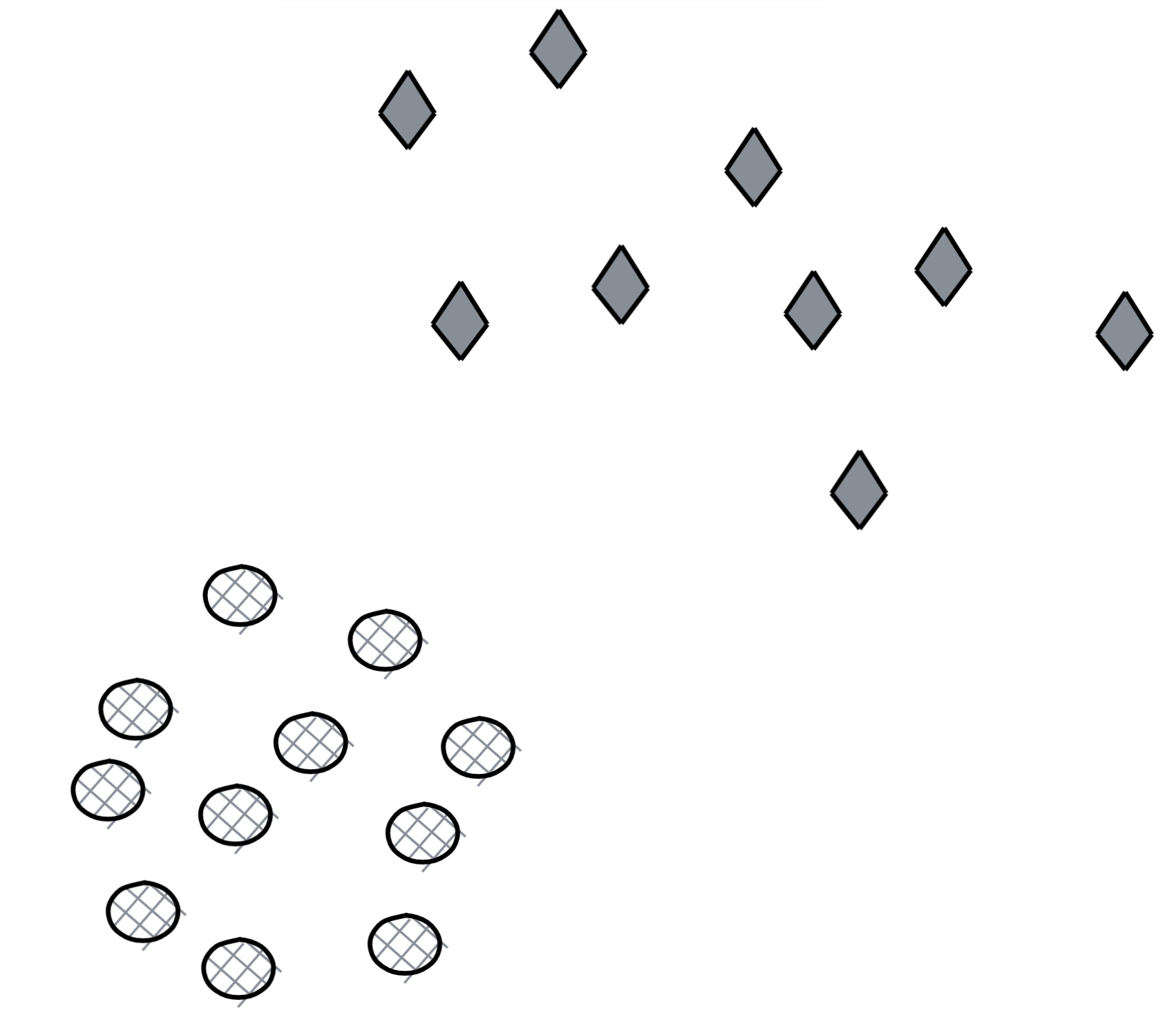

- [M] How well would vanilla SVM work on this dataset?

- [M] How well would vanilla SVM work on this dataset?

- [M] How well would vanilla SVM work on this dataset?

This book was created by Chip Huyen with the help of wonderful friends. For feedback, errata, and suggestions, the author can be reached here. Copyright ©2021 Chip Huyen.